
Experts, including the heads of OpenAI and Google DeepMind, have warned that artificial intelligence could lead to the extinction of humanity.

Dozens of experts have supported a statement published on the website of the Centre for AI Safety.

"Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war," it reads.

But others say the fears are overblown.

Sam Altman, chief executive of ChatGPT-maker OpenAI, Demis Hassabis, chief executive of Google DeepMind, and Dario Amodei of Anthropic have all supported the statement.

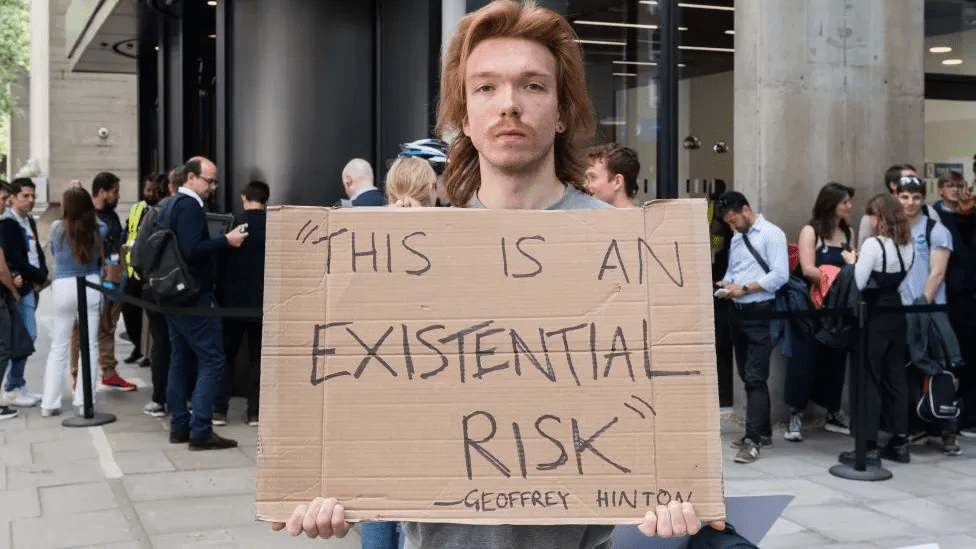

Dr Geoffrey Hinton, who issued an earlier warning about risks from super-intelligent AI, has also supported the call.

Yoshua Bengio, professor of computer science at the University of Montreal, also signed.

Dr Hinton, Prof Bengio and NYU Professor Yann LeCun are often described as the "godfathers of AI" for their groundbreaking work in the field, which earned them the 2018 Turing Award, a prize recognising outstanding contributions to computer science.

But Prof LeCun, who also works at Meta, has said these apocalyptic warnings are overblown.

In a tweet on May 4, 2023, Yann LeCun (@ylecun) wrote: "This is absolutely correct. The most common reaction by AI researchers to these prophecies of doom is face palming." https://t.co/2561GwUvmh

Many other experts similarly believe that fears of AI wiping out humanity are unrealistic, and a distraction from issues such as bias in systems that are already a problem.

Arvind Narayanan, a computer scientist at Princeton University, has previously told the BBC that sci-fi-like disaster scenarios are unrealistic: "Current AI is nowhere near capable enough for these risks to materialise. As a result, it's distracted attention away from the near-term harms of AI".

Pause urged

Media coverage of the supposed "existential" threat from AI has snowballed since March 2023 when experts, including Tesla boss Elon Musk, signed an open letter urging a halt to the development of the next generation of AI technology.

That letter asked if we should "develop non-human minds that might eventually outnumber, outsmart, obsolete and replace us".

In contrast, the new campaign has a very short statement, designed to "open up discussion".

The statement compares the risk to that posed by nuclear war. In a recent blog post, OpenAI suggested superintelligence might be regulated in a similar way to nuclear energy: "We are likely to eventually need something like an IAEA [International Atomic Energy Agency] for superintelligence efforts," the firm wrote.

'Be reassured'

Both Sam Altman and Google chief executive Sundar Pichai are among the technology leaders who have recently discussed AI regulation with UK Prime Minister Rishi Sunak.

Speaking to reporters about the latest warning over AI risk, Rishi Sunak stressed the benefits to the economy and society.

"You've seen that recently it was helping paralysed people to walk, discovering new antibiotics, but we need to make sure this is done in a way that is safe and secure," he said.

"Now that's why I met last week with CEOs of major AI companies to discuss what are the guardrails that we need to put in place, what's the type of regulation that should be put in place to keep us safe.

"People will be concerned by the reports that AI poses existential risks, like pandemics or nuclear wars.

"I want them to be reassured that the government is looking very carefully at this."

He had discussed the issue recently with other leaders, at the G7 summit of leading industrialised nations, Mr Sunak said, and would raise it again in the US soon.

The G7 has recently created a working group on AI.
