Chatbot site Character.ai is cutting off teenagers from having conversations with virtual characters, after facing intense criticism over the kinds of interactions young people were having with online companions.

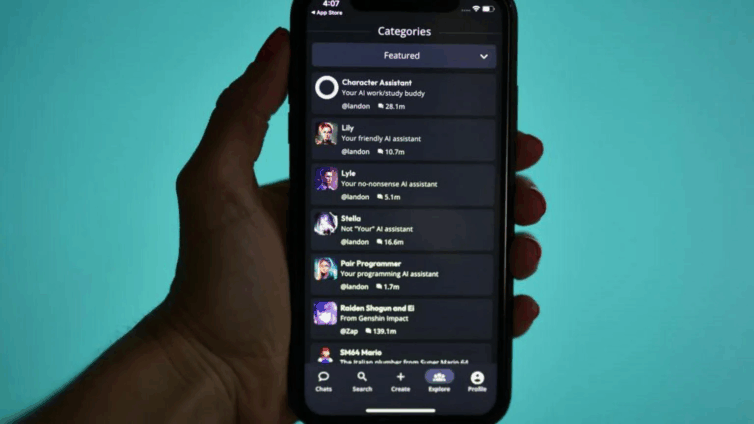

The platform, founded in 2021, is used by millions to talk to chatbots powered by artificial intelligence (AI).

But it is facing several lawsuits in the US from parents, including one over the death of a teenager, with some branding it a "clear and present danger" to young people.

Now, Character.ai says that from 25 November, under-18s will only be able to generate content such as videos with their characters, rather than talk to them as they can currently.

Online safety campaigners have welcomed the move but said the feature should never have been available to children in the first place.

Character.ai said it was making the changes after "reports and feedback from regulators, safety experts, and parents", which have highlighted concerns about its chatbots' interactions with teens.

Experts have previously warned that the potential for AI chatbots to make things up, be overly encouraging, and feign empathy can pose risks to young and vulnerable people.

"Today's announcement is a continuation of our general belief that we need to keep building the safest AI platform on the planet for entertainment purposes," Character.ai boss Karandeep Anand told BBC News.

He said AI safety was "a moving target" but something the company had taken an "aggressive" approach to, with parental controls and guardrails.

Online safety group Internet Matters welcomed the announcement, but it said safety measures should have been built in from the start.

"Our own research shows that children are exposed to harmful content and put at risk when engaging with AI, including AI chatbots," it said.

Character.ai has been criticised in the past for hosting potentially harmful or offensive chatbots that children could talk to.

Avatars impersonating British teenagers Brianna Ghey, who was murdered in 2023, and Molly Russell, who took her life at the age of 14 after viewing suicide material online, were discovered on the site in 2024 before being taken down.

Later, in 2025, the Bureau of Investigative Journalism (TBIJ) found a chatbot based on paedophile Jeffrey Epstein which had logged more than 3,000 chats with users.

The outlet reported the "Bestie Epstein" avatar continued to flirt with its reporter after they said they were a child. It was one of several bots flagged by TBIJ that were subsequently taken down by Character.ai.

The Molly Rose Foundation - which was set up in memory of Molly Russell - questioned the platform's motivations.

"Yet again it has taken sustained pressure from the media and politicians to make a tech firm do the right thing, and it appears that Character AI is choosing to act now before regulators make them," said Andy Burrows, its chief executive.

Wake-up call

Mr Anand said the company's new focus was on providing "even deeper gameplay [and] role-play storytelling" features for teens - adding these would be "far safer than what they might be able to do with an open-ended bot".

New age verification methods will also come in, and the company will fund a new AI safety research lab.

Social media expert Matt Navarra said it was a "wake-up call" for the AI industry, which is moving "from permissionless innovation to post-crisis regulation".

"When a platform that builds a teen experience still then pulls the plug, it's saying that filtered chats aren't enough when the tech's emotional pull is strong," he told BBC News.

"This isn't about content slips. It's about how AI bots mimic real relationships and blur the lines for young users," he added.

Mr Navarra also said the big challenge for Character.ai will be to create an engaging AI platform which teens still want to use, rather than move to "less safe alternatives".

Meanwhile, Dr Nomisha Kurian, who has researched AI safety, said it was "a sensible move" to restrict teens using chatbots.

"It helps to separate creative play from more personal, emotionally sensitive exchanges," she said.

"This is so important for young users still learning to navigate emotional and digital boundaries.

"Character.ai's new measures might reflect a maturing phase in the AI industry - child safety is increasingly being recognised as an urgent priority for responsible innovation."
