Google is trialling a digital watermark to spot images made by artificial intelligence (AI) in a bid to fight disinformation.

Developed by DeepMind, Google's AI arm, SynthID will identify images generated by machines.

It works by embedding changes to individual pixels in images so watermarks are invisible to the human eye but detectable by computers.

But DeepMind said it is not "foolproof against extreme image manipulation".
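The article does not describe how SynthID picks its pixel changes, and what follows is not DeepMind's method. It is a minimal, hypothetical spread-spectrum watermark in Python, assuming a grayscale image stored as a list of rows of 0-255 values. A secret key seeds a pseudorandom +1/-1 pattern, and each pixel is nudged by `strength` levels along that pattern. Detection correlates the image with the same pattern. The names `embed`, `detect`, `key`, `strength` and `threshold` are illustrative only.

```python
import random

def _pattern(key, n):
    # Hypothetical scheme: a secret key seeds a pseudorandom +1/-1 sequence.
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]

def embed(pixels, key, strength=2):
    """Return a copy of a grayscale image with every pixel nudged by +/-strength."""
    flat = [p for row in pixels for p in row]
    pattern = _pattern(key, len(flat))
    # Clamp to the valid 0-255 range; a shift of a couple of levels is hard to see.
    marked = [min(255, max(0, p + strength * s)) for p, s in zip(flat, pattern)]
    width = len(pixels[0])
    return [marked[i:i + width] for i in range(0, len(marked), width)]

def detect(pixels, key, threshold=1.0):
    """Correlate the image with the key's pattern; returns (score, is_marked)."""
    flat = [p for row in pixels for p in row]
    pattern = _pattern(key, len(flat))
    mean = sum(flat) / len(flat)
    # Subtracting the mean makes the score ignore uniform brightness shifts.
    score = sum((p - mean) * s for p, s in zip(flat, pattern)) / len(flat)
    return score, score > threshold

image = [[(x + y) // 2 for x in range(256)] for y in range(256)]  # smooth test gradient
marked = embed(image, key=42)
print(detect(marked, key=42))  # scored with the right key
print(detect(image, key=42))   # original, unmarked image
```

Because detection subtracts the image mean, this toy scheme still finds the mark after a uniform brightness change. A crop, however, would typically shift the pattern out of alignment with the pixels and hide the mark, which is why making a watermark survive cropping and resizing takes considerably more work.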

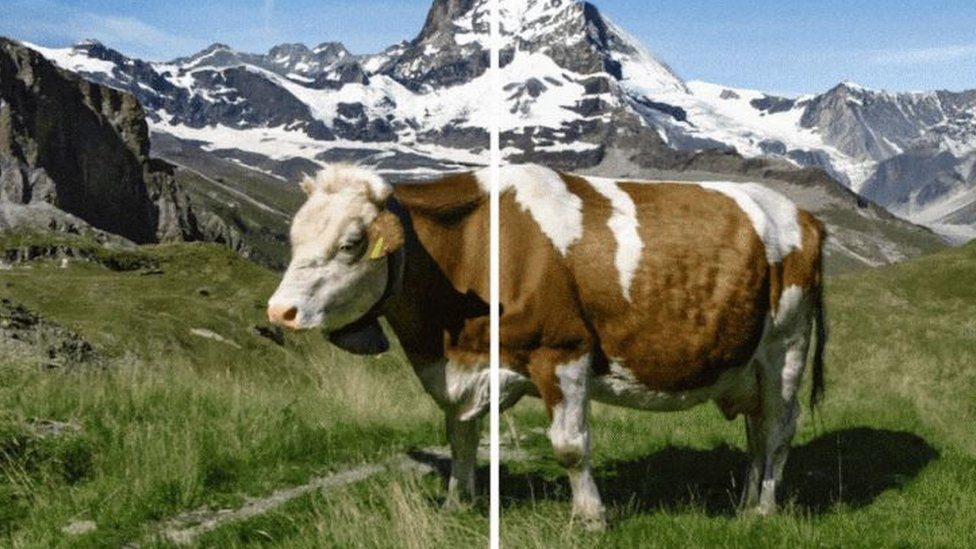

As the technology evolves, it is becoming increasingly difficult to tell the difference between real images and artificially generated ones, as BBC Bitesize's AI or Real quiz shows.

AI image generators have become mainstream, with the popular tool Midjourney boasting more than 14.5m users.

They allow people to create images in seconds by inputting simple text instructions, leading to questions over copyright and ownership worldwide.

Google has its own image generator called Imagen, and its system for creating and checking watermarks will only apply to images created using this tool.

Invisible

Watermarks are typically a logo or text added to an image to show ownership, and partly to make it harder for the picture to be copied and used without permission.

They appear, for example, in images on the BBC News website, which usually carry a copyright watermark in the bottom-left corner.

But these kinds of watermarks are not suitable for identifying AI-generated images because they can easily be edited or cropped out.

Tech companies use a technique called hashing to create digital "fingerprints" of known videos of abuse, so they can spot them and remove them quickly if they start to spread online. But these too can become corrupted if the video is cropped or edited.
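To see why such fingerprints are fragile, here is a minimal Python sketch of the simplest kind: an exact SHA-256 hash over an image's raw pixel bytes, again assuming a grayscale image stored as rows of 0-255 values. This is an illustrative assumption, not any company's system; production tools generally use perceptual hashes that tolerate some edits, though those too can break under heavier cropping or editing. The helpers `fingerprint` and `is_known` are hypothetical.

```python
import hashlib

def fingerprint(pixels):
    """Hypothetical exact fingerprint: SHA-256 over the image size and raw bytes."""
    digest = hashlib.sha256()
    digest.update(f"{len(pixels)}x{len(pixels[0])}".encode())
    for row in pixels:
        digest.update(bytes(row))  # each value must be an int in 0-255
    return digest.hexdigest()

def is_known(pixels, known_fingerprints):
    """True only if the image matches a listed fingerprint exactly."""
    return fingerprint(pixels) in known_fingerprints

image = [[(x * 7 + y * 3) % 256 for x in range(32)] for y in range(32)]
known = {fingerprint(image)}

cropped = [row[1:] for row in image]     # drop the left-most column
edited = [row[:] for row in image]
edited[0][0] = (edited[0][0] + 1) % 256  # change one pixel by one level

print(is_known(image, known), is_known(cropped, known), is_known(edited, known))
# → True False False
```

Cropping away a single column, or changing one pixel by one brightness level, produces a completely different fingerprint, so the edited copy no longer matches the list of known images.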

Google's system creates an effectively invisible watermark, which will allow people to use its software to find out instantly whether the picture is real or made by machine.


Pushmeet Kohli, head of research at DeepMind, told the BBC its system modifies images so subtly "that to you and me, to a human, it does not change".

He said that, unlike with hashing, the firm's software can still identify the presence of the watermark even after the image has been cropped or edited.

"You can change the colour, you can change the contrast, you can even resize it... [and DeepMind] will still be able to see that it is AI-generated," he said.

But he cautioned this is an "experimental launch" of the system, and the company needs people to use it to learn more about how robust it is.

Standardisation

In July, Google was one of seven leading companies in artificial intelligence to sign up to a voluntary agreement in the US to ensure the safe development and use of AI, which included ensuring that people are able to spot computer-made images by implementing watermarks.

Mr Kohli said the move reflected those commitments, but Claire Leibowicz, from campaign group Partnership on AI, said there needed to be more coordination between businesses.

"I think standardisation would be helpful for the field," she said.

"There are different methods being pursued, we need to monitor their impact - how can we get better reporting on which are working and to what end?

"Lots of institutions are exploring different methods, which adds to degrees of complexity, as our information ecosystem relies on different methods for interpreting and disclaiming the content is AI-generated," she said.

Microsoft and Amazon are among the big tech companies which, like Google, have pledged to watermark some AI-generated content.

Beyond images, Meta has published a research paper on its unreleased video generator, Make-A-Video, which says watermarks will be added to generated videos to meet similar demands for transparency over AI-generated works.

China banned AI-generated images without watermarks altogether at the start of this year, with firms like Alibaba applying them to creations made with its cloud division's text-to-image tool, Tongyi Wanxiang.
